Summary

- This blog elaborates the major technical problems a human detection solution encounters: Viewpoint variation, Pose variation, and occlusion.

- Understand how to use Anchor boxes and multiple feature maps to manage viewpoint variation.

- Learn how to manage the imbalance of variations in the training set for Pose Variation problems.

- Understand how to handle Occlusion problems in general and Non-maximum suppression in particular.

- Check out the VisAI People Platform optimized for the edge & with better privacy options for the people you detect

written For

- Engineer and project managers who are in the evaluation stage for identifying the right People Detection system for their specified product/use-case

Introduction

Generally, AI-based models work better when we use a localized and controlled environment in training the model. But the significant advantage of using an AI solution is its generalisability. It can be trained in a different environment with useful data variations and deployed in a different environment with decent accuracy. We must teach the AI model to learn the target object’s features rather than the environment’s features.

Deep learning solutions are data-hungry; the more the data, the more the detection model’s accuracy. But not everyone has the benefit of having millions of data. Hence one has to figure out a specific use case for the type of problem that can be solved from the data they used for training rather than targeting a generic solution.

There are lots of issues that exist from the detection model.

- Viewpoint variation

- Pose variation

- Occlusion

Viewpoint variation

As the object’s viewpoints vary, the object’s shape changes drastically, which also changes the object’s features, and this causes the detector trained from one perspective to fail on other viewpoints.

For example, if you train the sensor on a video-surveillance view, it might look for multiple human parts combinations like the head, upper body, and lower body to conclude it’s a person during training. This detector will fail if we place a camera overhead because the sensor can only view the person’s head. Hence the detection accuracy will be inferior.

One more problem with viewpoint is the same person can be far from a camera or nearby. As a result, the size of objects varies. The detector is therefore required to be size invariant.

To address this problem, most detectors in deep learning models employ specific techniques. One such technique is the selection of anchor boxes.

What is an Anchor box?

Instead of directly predicting bounding box coordinates, the model predicts off-sets from a predetermined set of boxes with particular height-width ratios. Those predetermined sets of boxes are the anchor boxes. The anchor boxes’ shapes and sizes are selected to cover various scales and aspect ratios.

One more technique we would like to discuss here is the usage of multiple feature maps. When the detection and tracking are taken from only the final Convolution neural network (CNN) layer, only large size objects will be detected. Because smaller objects tend to lose their feature information during the down-sampling that happens in the pooling layers.

Some detectors like YOLO detect multiple network levels (meaning it preserves the features in the earlier CNN layers where the smaller object features present). This technique will improve the detections of smaller objects or objects of a different scale to a good extent. This technique is also called FPN (Feature Pyramid Network).

Pose variation

Many objects of interest are not rigid bodies. They can be deformed in powerful ways. For example, in the human detection solution, the person can be in different kinds of pose variations like walking, standing, running, sitting, doing any activities, etc. The features of the person in these pose variations vary drastically.

If the object detector is trained to detect a person with training images that only included standing, moving, or walking individuals, it may not be able to track individuals shown in the pictures below since the features in these images may not match the ones that it learned about people during training.

The training set must include all these variational inputs to detect all kinds of pose variations in the solution. The imbalance of variations in the training set is another technical issue that appears though we have included the variational images. We could not get possibly equal numbers of images in different pose variations from the public domain.

One can quickly get several images of a person standing or walking, whereas getting an equal number of certain sport activities images from public datasets is generally challenging. While training, the model will give more weightage to the standing scenarios. It would be fair if you were careful in maintaining the right balance in the training dataset.

Occlusion

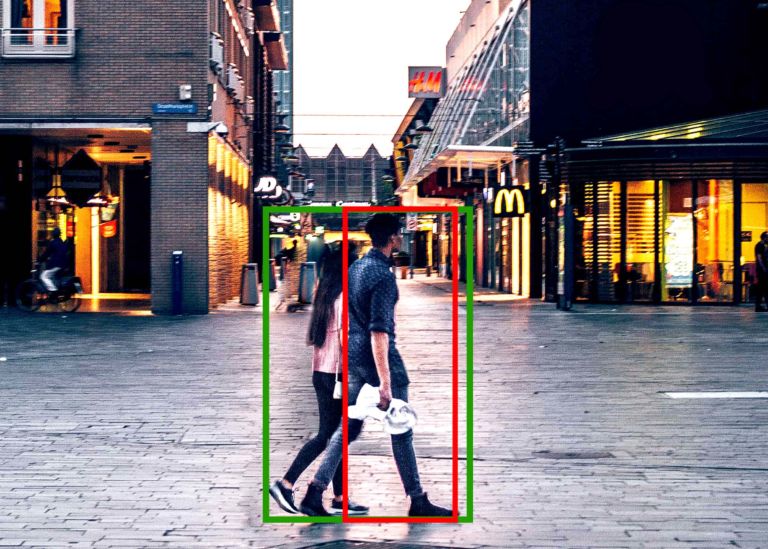

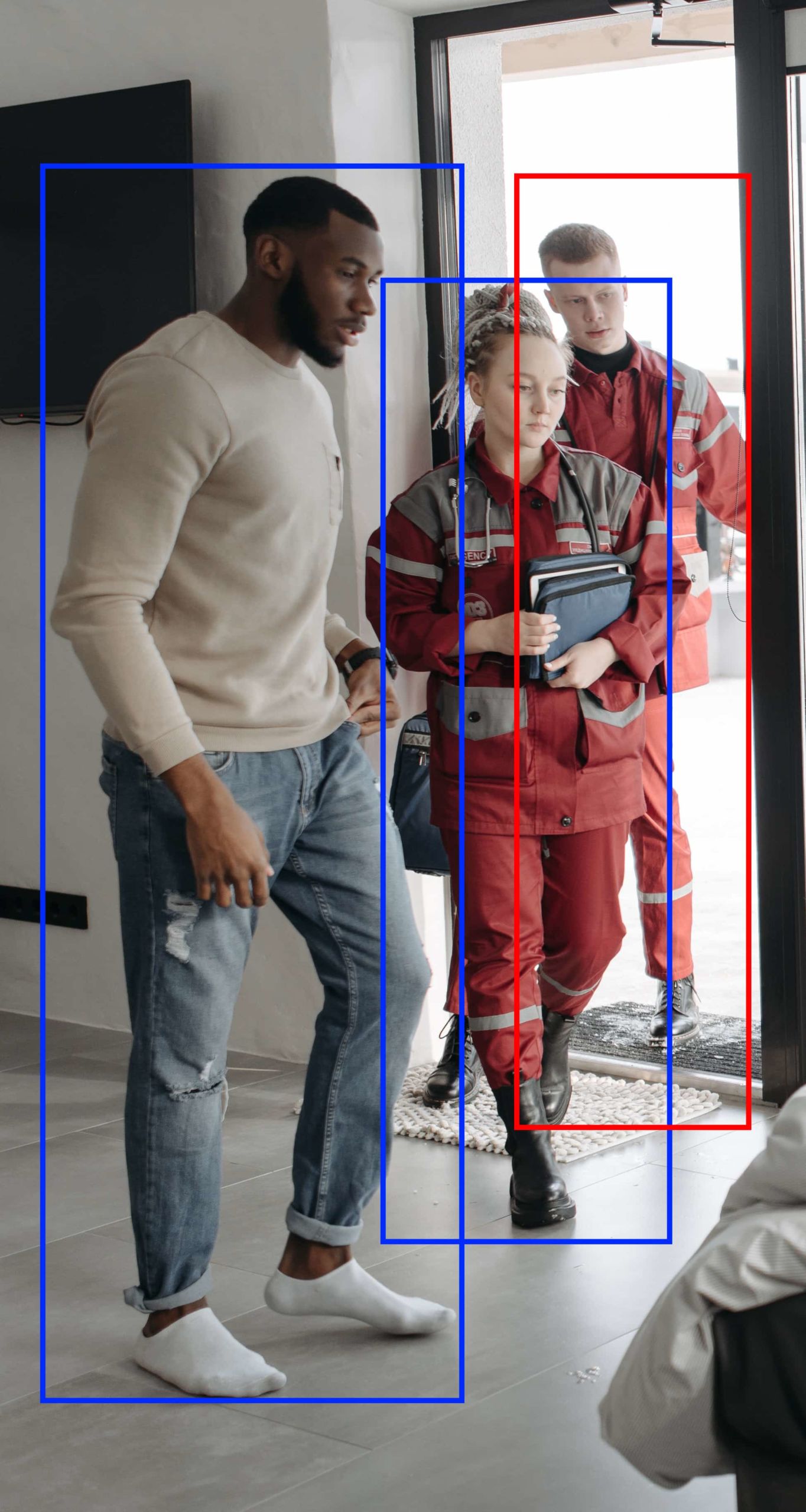

Occlusion is another major problem for human detection in general. Some scenarios include when two or more persons come too close, or when the object occludes a person in front, or when a chair partially occludes a person. In these scenarios, the object of interest seemingly merges. These scenarios are called occlusion.

By this occlusion problem, the features extracted from them are not strong enough to say that they are objects. Because of this occlusion problem, detecting persons in a crowd scenario is becoming furthermore tricky.

An alternate way to understand the occlusion problem is using the IOU concept are as follows

- If two object’s bounding boxes have Zero IOU (IOU=0), we can say that there is no occlusion.

- If two object’s bounding boxes have One IOU (IOU=1), we can say that another completely occludes one object or thing.

- If two object’s bounding boxes have IOU between 0 and 1 (0 < IOU < 1), then we consider the object is partially occluded, or there might be a complete occlusion if the object is small.

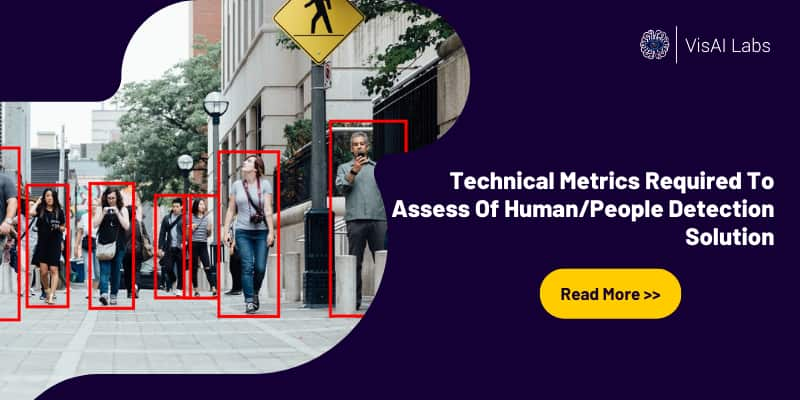

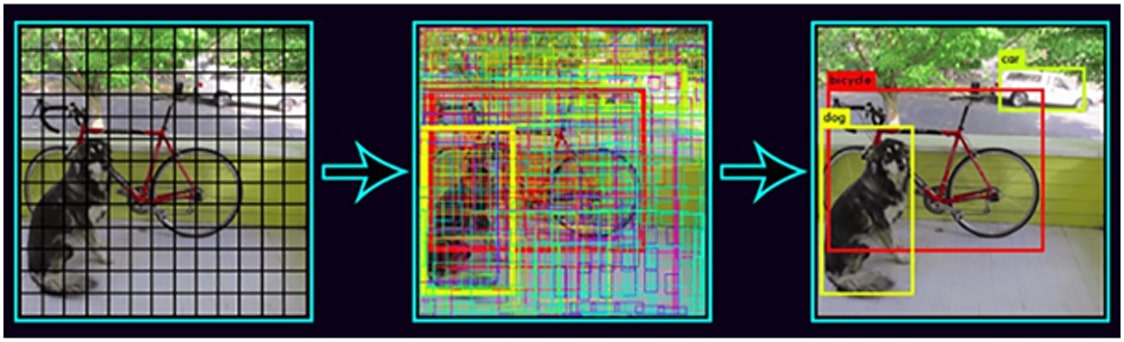

Another technical difficulty in dealing with occlusion problems is using NMS (Non-maximum suppression) in post-processing. Any object detection model ends with the NMS layer for suppressing the bounding box proposed by different model output grids. You can understand the reason for using NMS from the below image,

The first image shows the input image with grids (grid represents the output size of the model), here it shows a 13×13 grid, which means our model output layer size is 13×13. The second image shows the bounding box outputted by each grid, I.e., 13×13=169 bounding boxes. The third image shows the output after NMS, I.e., Suppressed the bounding box based on confidence score and IoU.

Suppressing based on confidence score is understandable, but what is suppressing based on IoU?

We will suppress or remove the bounding boxes with higher IoU with another box of the same class object. Which box to retain and which box to leave is decided based on the confidence score.

So, what’s the problem here for occlusion? There will be an overlap in the box; if that overlap IoU is above a threshold, we will suppress one box. If two objects overlapped with each other (front and back) and suppose our model has also outputted bounding box for each object (before NMS), this NMS logic may suppress one. So occlusion problem is difficult to deal with in this case.

Detecting a completely occluded object is impossible from a 2D image just from one camera view. One of the most straightforward techniques for better detecting partially occluded objects is to run inferencing on a higher resolution image. Thereby you can get a higher spatial resolution feature map at the output CNN layer.

As a result of this higher resolution feature map, multiple people are found in densely crowded regions. But this reduces the throughput due to computation overhead. You need to either go for high-performance hardware or you need to accept the low accuracy model!!.

Conclusion

Human detection and tracking processes are part of computer vision technology that helps locate and track humans in the video sequence to train AI models to perform various real-time tasks based on the business requirements.

Is there a problem in incorporating people tracking, detection, and recognition into your products? Talk to us.

VisAI Platform – Human/People detection Solution

VisAI Labs understands the fundamental necessity of edge-optimized human/people detection algorithms that satisfy a variety of use-cases and are pre-tested in different testing scenarios so that you can focus on the development of your product/solution.

VisAI Labs Human/People Detection Algorithms are designed for People Counting, Congestion Detection, Intrusion Detection, Social Distance Checker, Dwell Time Detection, and Building Tracking.

Feel free to reach us at sales@visailabs.com