Summary

- This blog provides a framework to help engineers and product managers design and test scenarios based on their specific people detection use cases.

- Understand how to identify camera testing parameters, the target environment, and target scale parameters.

- Also Check Out: Technical Metrics used to assess a human/people detection solution

- Intended for engineers and project managers who are evaluating People Detection systems to find the right one for their use case

Introduction

The New Wave Of Human/People Detection Technology

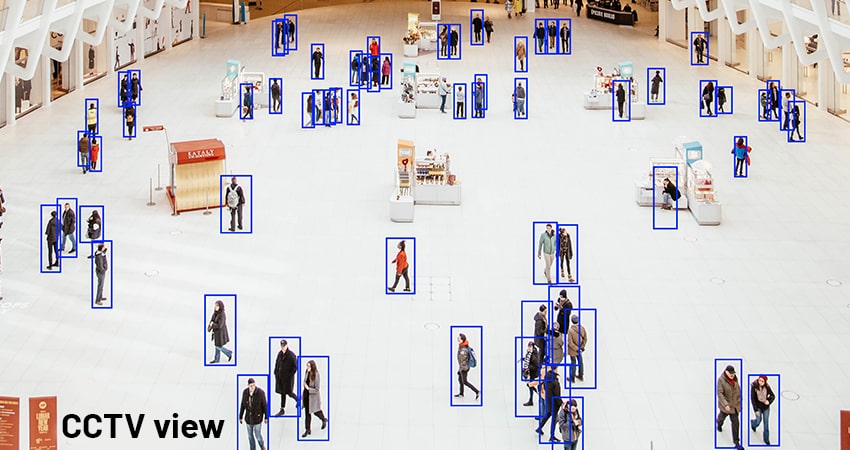

Watch Demo Video: How to do human/people counting from a top FoV (field of view)

Selecting The Right Testing Scenario

Selecting the right test scenario is as crucial as selecting scenarios for training your person detection solution. Your test scenario should reflect your actual target requirement, i.e., how you will use your people detection solution. In short, your training and testing scenarios should be closely matched to achieve good performance.

Camera Parameters

- Intrinsic or internal

- Extrinsic or external

Target Environment

Target Scale

Selecting The Right Testing Methodology

There are two common ways to perform testing:

Dataset-Based Testing

Dataset-based testing is the conventional approach to testing any object detection model. It requires considerable effort to generate Ground Truth (GT) for the test dataset. Once the GT is available, evaluating the prediction results is straightforward. Metrics such as Average Precision (AP) or mean Average Precision (mAP), as defined in the previous blog, can be used to quantify accuracy.
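As a rough illustration of how predictions are scored against GT, the sketch below computes precision and recall for one image at a single IoU threshold, which is the building block that AP is computed from. The box format `(x1, y1, x2, y2)`, the greedy matching rule, and the function names are assumptions made for this example, not a definition taken from the previous blog.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Score one image. predictions is a list of (box, score) pairs.

    Predictions are visited from highest to lowest score, and each one is
    matched to the unmatched GT box it overlaps most (assumed greedy rule).
    """
    matched = set()
    true_positives = 0
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best_idx, best_iou = None, iou_threshold
        for idx, gt_box in enumerate(ground_truth):
            if idx in matched:
                continue
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is not None:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall
```

Sweeping the score threshold and recording precision at each recall level would give the precision-recall curve from which AP is derived.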

Invariance Tests: To check the consistency of the model’s predictions, we can introduce changes to the input and check how the model reacts. This is closely related to data augmentation, where we apply changes to the input during training while preserving the ground truth annotations.
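A minimal sketch of an invariance test might look like the following. It assumes a hypothetical `detect` callable that takes an image (here a list of pixel rows) and returns a list of detections; the perturbations and the "same person count" criterion are illustrative choices, and a stricter test could compare box positions as well.

```python
def flip_horizontal(image):
    """Mirror an image stored as a list of pixel rows."""
    return [list(reversed(row)) for row in image]


def shift_brightness(image, delta):
    """Add delta to every pixel, clamped to the 0-255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]


def invariance_failures(detect, image, perturbations):
    """Return the names of perturbations that change the detected count.

    detect is a hypothetical callable: image -> list of detections.
    perturbations maps a name to a function: image -> perturbed image.
    """
    baseline = len(detect(image))
    failures = []
    for name, perturb in perturbations.items():
        if len(detect(perturb(image))) != baseline:
            failures.append(name)
    return failures
```

An empty result means the count stayed stable under every perturbation that was tried; any name in the list points to a condition worth adding to the training or test data.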

Minimum Functional Tests: Minimum functional tests allow us to define a set of test cases with their expected behavior, which helps us quantify the model performance in different scenarios.
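One way to organize such tests is a small table of cases grouped by scenario, as sketched below. The case keys (`scenario`, `image`, `expected_count`) and the `detect` callable are hypothetical names chosen for this example, and the expected behavior is reduced to an exact person count for simplicity.

```python
def run_functional_tests(detect, cases):
    """Run every case and return pass statistics per scenario.

    Each case is a dict with the assumed keys "scenario", "image",
    and "expected_count". detect is a hypothetical callable that
    returns a list of detections for an image.
    """
    report = {}
    for case in cases:
        passed = len(detect(case["image"])) == case["expected_count"]
        stats = report.setdefault(case["scenario"], {"passed": 0, "total": 0})
        stats["total"] += 1
        stats["passed"] += int(passed)
    return report
```

Grouping by scenario (for example low light, crowded scene, or empty room) makes it easier to see which conditions the model handles poorly, rather than hiding them in a single overall score.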

Real-Time Deployment Testing

Motion-Based Sample Testing: Instead of processing every frame from the stream, we can use motion sensing, running the model and checking the correctness of its predictions only when motion is detected. This reduces the human effort needed to monitor the system continuously.
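The sketch below shows one simple way to select frames for review, using frame differencing as the motion trigger. This is an assumption for illustration: the source does not say how motion is sensed, and a hardware motion sensor or a background-subtraction method could be used instead. Frames are represented as lists of grayscale pixel rows, and the threshold value is arbitrary.

```python
def mean_abs_diff(prev, curr):
    """Average absolute per-pixel difference between two same-sized frames."""
    total = sum(
        abs(a - b)
        for row_prev, row_curr in zip(prev, curr)
        for a, b in zip(row_prev, row_curr)
    )
    pixels = sum(len(row) for row in curr)
    return total / pixels if pixels else 0.0


def frames_with_motion(frames, threshold=10.0):
    """Yield (index, frame) for frames that differ enough from the previous one."""
    prev = None
    for idx, frame in enumerate(frames):
        if prev is not None and mean_abs_diff(prev, frame) > threshold:
            yield idx, frame
        prev = frame
```

Only the yielded frames would then be passed to the model and reviewed, so static periods in the stream cost no reviewer time.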

Person Count Based Testing: Another approach is to compare the number of persons counted by our model against some ground truth, such as the count from an IR sensor. This approach requires integrating a tracking solution, so the results also depend on the tracker’s accuracy.

- If the model’s predicted count matches the ground truth count from the IR sensor, the model did not miss any detections.

- If the model count is less than the ground truth, the model missed some detections.

- If the model count is more than the ground truth, the model produced additional false positives.
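The three cases above can be sketched as a small comparison function. The function name and the returned labels are hypothetical, chosen only for this example.

```python
def compare_counts(model_count, ground_truth_count):
    """Classify a model count against the ground truth count.

    Returns a (label, difference) pair, where label is "match",
    "missed" (model counted fewer), or "extra" (model counted more).
    """
    diff = model_count - ground_truth_count
    if diff == 0:
        return "match", 0
    if diff < 0:
        return "missed", -diff
    return "extra", diff
```

Note that a matching count is a necessary but not sufficient check: one missed person and one false positive in the same interval cancel out, so comparing counts over short time windows helps reduce this masking.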

Conclusion

Having trouble incorporating people tracking, detection, and recognition into your products? Talk to us.

Feel free to reach us at sales@visailabs.com