Summary

- Human Detection is done in two ways: Bound box detection and Segmentation approach

- The primary Accuracy metrics used are mean precision and mean Average precision

- Also Check Out: VisAI People Platform optimized for the edge & with better privacy options for the people you detect

- Product Managers and Engineers evaluating Human/People detection algorithms to identify the right fit algorithm for your use-case

Introduction

In recent years, human/people detection in a video-scene has attracted more attention due to its wide range of applications, including abnormal event detection, counting people in a dense crowd, individual identification, gender classification, and fall detection for older adults. The task of human detection in an array of aerial images is quite challenging due to various reasons.

This blog post addresses the accuracy metrics commonly used in assessing a human detection solution. Before getting into its details, let’s first understand the how and what of human detection.

It includes two primary elements when approaching human detection with the assistance of computer vision applications. The first is technical, like identifying an individual either in an image or a video. The second element is what you can do with those outcomes.

What Is Human/People Detection?

Human detection is the task of computer vision systems for locating the human beings present in an image or video; through this feature, we can customize the video and alert preference to decide whether you want to look after video and alerts for all motions. This human detection technique has two different approaches:

1. Bounding Box detection approach:

This approach detects the human with bounding box values as output. The bounding box is a rectangle over images, outlining the object of interest (in this case, Humans) within each image by defining its X and Y coordinates and Width and Height.

What is a bounding box?

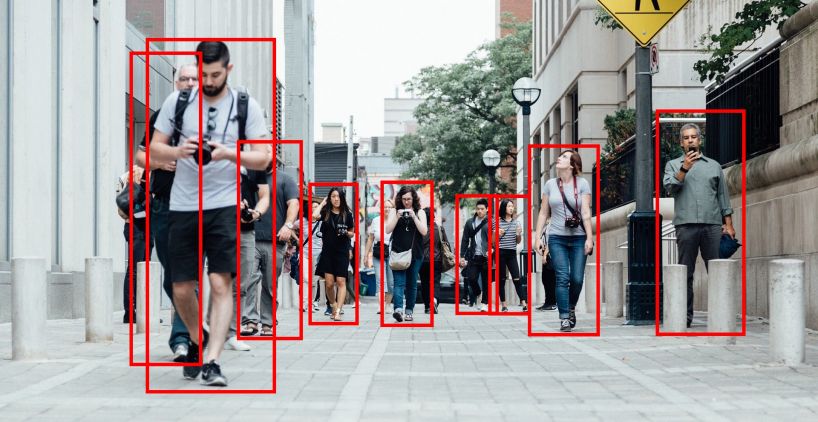

In Figure 1, we can see that detected people with a bounding box (a red rectangle outlining each people in an image) as output.

2. Segmentation approach:

It can be of two types:

(a) Semantic segmentation: In this method, we label each image based on the pixels with a corresponding class of what is represented. Every pixel belongs to either of the two classes (background or person). All the pixels belonging to a particular class highlighted in the same color.

In Figure 2, we can see that both persons represented with the same color mean the same class (Person), and the background in another color represents another class.

In Figure 3, we can see that all are of the same class(person) but have different colors representing different person instances.

Accuracy Metrics

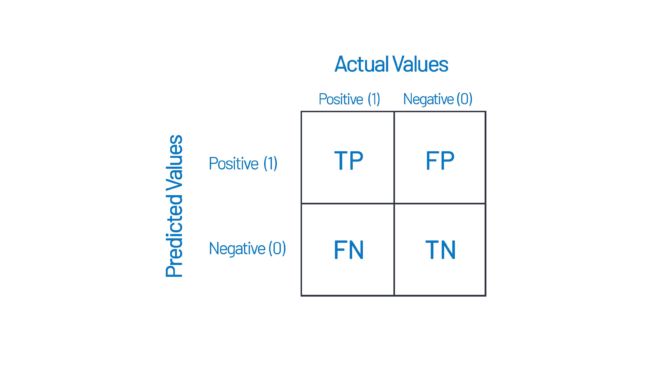

Terms Involved

- Ground Truth

- True positive; false positive; true negative; false negative

- Precision

- Recall

- IOU (Intersection over Union)

Now to put together the above terms concerning our target class Person.

Precision is a model’s ability to identify only the relevant objects, meaning it estimates how precise your prediction. It is the percentage of correct positive predictions and is given by:

Precision = TP / (TP+FP) = TP / (all detections) ——- eq1

A recall is a model’s ability to find all the relevant cases (all ground truth bounding boxes). In other terms, it determines how good you identify all the positives. It is the percentage of true positive detected among all relevant ground truths and is given by.

Recall = TP / (TP+FN) = TP / (all ground truths) ——- eq2

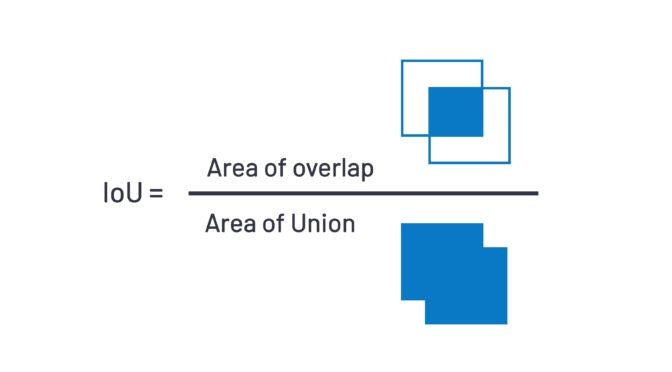

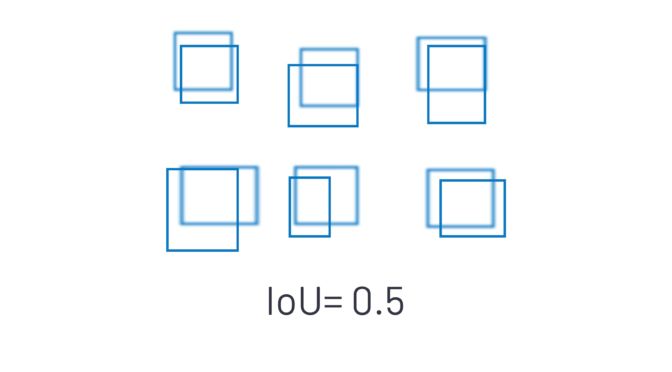

Intersection over Union (IOU) is a measure that evaluates the overlap between ground truth annotated bounding boxes and predicted bounding boxes. In a few datasets, we predetermine an IoU threshold in classifying whether the prediction is a true positive or a false positive.

IOU = Area of overlap / Area of union —— eq3

|

Rank (Confidence Score) |

True or False (IoU >= 0.5) |

Precision |

Recall |

|

1(0.99) |

True |

1.0 |

0.2 |

|

2(0.98) |

True |

1.0 |

0.4 |

|

3(0.96) |

True |

1.0 |

0.6 |

|

4(0.95) |

False |

0.75 |

0.6 |

|

5(0.94) |

True |

0.8 |

0.8 |

|

6(0.91) |

False |

0.66 |

0.8 |

|

7(0.90) |

False |

0.57 |

0.8 |

|

8(0.89) |

False |

0.5 |

0.8 |

|

9(0.88) |

False |

0.44 |

0.8 |

|

10(0.86) |

True |

0.5 |

1.0 |

|

11(0.75) |

– |

– |

– |

|

12(0.73) |

– |

– |

– |

|

13(0.68) |

– |

– |

– |

|

14(0.67) |

– |

– |

– |

|

15(0.65) |

– |

– |

– |

Precision = TP / (all detections) =>3 / 4 = 0.75

Recall = TP / (all ground truths) => 3 / 5 = 0.6

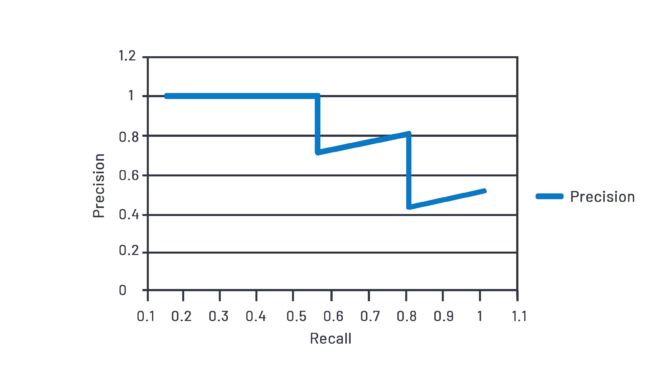

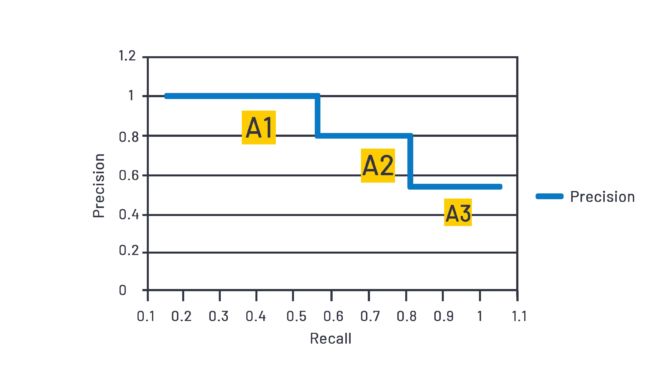

From the table, we can see that Recall values rise as we go down the prediction ranking. However, precision has a zig-zag pattern-it goes down when it encounters false positives and goes up when it meets true positives.

Average precision is the area under the precision-recall curve. There are three areas A1, A2, and A3 that we can see from the above graph to sum up, to get the AP.

A1 = (0.6-0.0) * (1) = 0.6

A2 = (0.8-0.6) * (0.8) = 0.16

A3 = (1-0.8) * (0.5) = 0.1

AP = A1 + A2 + A3

AP = 0.6 + 0.16 + 0.1 = 0.86

Mean Average Precision(mAP): The calculation of AP only involves one class. But in object detection, we can have more than one class. So, the Mean Average Precision is defined as the mean of AP across all classes. For people detection, we have only one class (i.e., person), so AP=mAP.

Metrics explained for different cases

- What would be the mAP value when Ground Truth (GT) and Prediction are the same?

- What would be the mAP if there is no match with GT?

- What would be the mAP if there are more FP (False Positives) in prediction in addition to predicting all GT (Ground Truth)?

- What is the minimum recommended IoU value for people detection mAP computation?

To discover more, we cheer you to check out our company website on https://visailabs.com/

VisAI Platform For Human/People Detection Solution

VisAI Labs has understood the need for robust edge optimized Human/people detection algorithms that can satisfy a wide range of use cases and comes pre-tested across various testing scenarios so that you could focus on building your product/solution.

VisAI Labs Human/People detection algorithms are optimized for People counting, Congestion detection, Dwell Time detection, Intrusion detection, Social distancing checker, and Tracking across buildings.

Feel free to reach us at sales@visailabs.com