4 questions this podcast will answer:

01. What are the different camera parameters you should define in your testing scenario?

02. How do you set up different target environments for testing purposes?

03. Why is identifying the scale of a solution imperative for choosing a testing scenario?

04. What are some examples of how testing scenarios are set up?

Relevant links:

Podcast Transcript:

Hi, this is Alphonse, and in today's edition of unravelling computer vision and Edge AI for the real world, I'll be talking about choosing the right testing scenario for your people detection systems based on specific use cases.

First, people detection and tracking use cases are mainly found in the surveillance industry. At VisAI labs, we have also helped integrate people detection systems into use cases in ADAS, that is, advanced driver assistance systems, as well as sports, entertainment, and industrial safety.

In each use case, the accuracy and speed requirements differ based on criticality.

For example, in an ADAS solution within an autonomous vehicle, processing on the fly, or speed, is the more critical factor, but accuracy also matters: we can afford false positives to a certain extent, but false negatives should be near zero.
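
To make a "near-zero false negatives" requirement testable, it can help to express it as precision (which drops as false positives rise) and recall (which drops as false negatives rise). Below is a minimal Python sketch; the `meets_adas_criteria` helper and its 0.90 precision and 0.995 recall thresholds are hypothetical placeholders, not figures from this podcast.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from detection counts."""
    # Precision is hurt by false positives; recall is hurt by false negatives.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def meets_adas_criteria(tp: int, fp: int, fn: int,
                        min_precision: float = 0.90,
                        min_recall: float = 0.995) -> bool:
    """Hypothetical acceptance check: tolerate some false positives,
    but require recall close to 1 (near-zero false negatives)."""
    precision, recall = precision_recall(tp, fp, fn)
    return precision >= min_precision and recall >= min_recall


# Illustrative counts: 1000 people in the test set, 998 found, 60 spurious boxes.
p, r = precision_recall(tp=998, fp=60, fn=2)
print(f"precision={p:.3f} recall={r:.3f}")
print(meets_adas_criteria(998, 60, 2))  # True
```

The asymmetry lives in the thresholds: a looser precision bound and a strict recall bound encode "some false positives are acceptable, missed people are not".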

So, when choosing testing scenarios, it is important that the test scenario reflects your actual target requirements, simulating the use-case conditions as closely as possible.

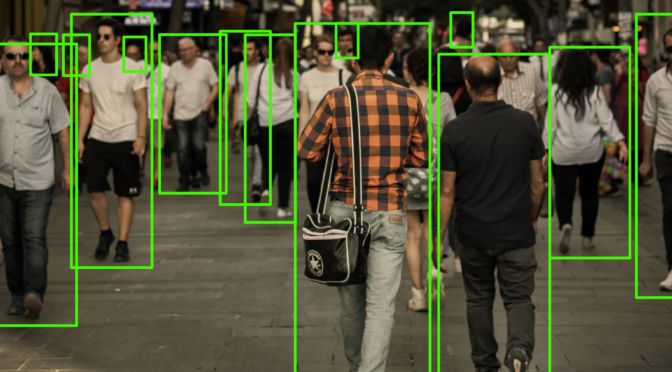

In general, these testing scenarios must be chosen to test the camera parameters, target environment, and scale.

Now, as a first step, the testing scenario should test both the intrinsic and the extrinsic camera parameters. Intrinsic, or internal, parameters are those internal to the camera, such as focal length and lens distortion.

We need to check the sensor size as well as the focal length to determine the number of pixels a person will occupy in the image.
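
As a rough illustration of how focal length and sensor size set the pixel footprint of a person, here is a sketch based on the ideal pinhole camera model. It ignores lens distortion, camera tilt, and the principal-point offset, and the 4 mm lens, 3.6 mm sensor height, and 1080-row resolution in the example are assumptions chosen only for illustration.

```python
def person_height_px(focal_mm: float, sensor_height_mm: float,
                     image_height_px: int, person_height_m: float,
                     distance_m: float) -> float:
    """Approximate vertical pixel extent of a person (ideal pinhole model)."""
    # Size of the person's projection on the sensor, in millimetres.
    image_on_sensor_mm = focal_mm * person_height_m / distance_m
    # Convert millimetres on the sensor into pixel rows.
    pixels_per_mm = image_height_px / sensor_height_mm
    return image_on_sensor_mm * pixels_per_mm


# Hypothetical camera: 4 mm lens, 3.6 mm sensor height, 1080 rows; 1.7 m person.
for distance in (5.0, 10.0, 20.0):
    px = person_height_px(4.0, 3.6, 1080, 1.7, distance)
    print(f"{distance:>4.0f} m -> {round(px)} px")
```

Doubling the distance halves the pixel height, which is why the intrinsic parameters and the target scale cannot be chosen independently.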

Please note that these intrinsic parameters and the target scale are linked; I'll explain this later in the podcast.

Among the extrinsic, or external, parameters, one of the most important to check is the camera orientation, or camera pose.

This has an important bearing on the efficacy of your people detection algorithm.

If your algorithm is trained to work with a top-down field of view and the camera is placed in front of the person, your algorithm's accuracy will be very poor.
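
One way to catch a pose mismatch before running tests is to estimate the viewing angle from the camera's mounting height and its horizontal distance to the people it should detect. This sketch assumes a flat ground plane; `view_category`, its angle thresholds, and the view labels are hypothetical and would need tuning against the viewpoints in your own training data.

```python
import math


def viewing_angle_deg(mount_height_m: float, horizontal_distance_m: float) -> float:
    """Angle below the horizontal of the line of sight from camera to a person's feet."""
    return math.degrees(math.atan2(mount_height_m, horizontal_distance_m))


def view_category(angle_deg: float, top_min: float = 60.0,
                  frontal_max: float = 25.0) -> str:
    # Hypothetical thresholds separating top, oblique, and frontal views.
    if angle_deg >= top_min:
        return "top"
    if angle_deg <= frontal_max:
        return "frontal"
    return "oblique"


def pose_matches_training(mount_height_m: float, horizontal_distance_m: float,
                          trained_view: str) -> bool:
    """Flag test placements whose viewpoint differs from the training viewpoint."""
    angle = viewing_angle_deg(mount_height_m, horizontal_distance_m)
    return view_category(angle) == trained_view


# Ceiling camera 3 m up, person 0.5 m away: roughly 80 degrees, a top view.
print(view_category(viewing_angle_deg(3.0, 0.5)))  # top
# Pole camera 1.5 m up, person 10 m away: roughly 8.5 degrees, a frontal view.
print(pose_matches_training(1.5, 10.0, trained_view="top"))  # False
```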

Now, the second major parameter we have to look at is your target environment.

Let's suppose you want to use a people detection system for workforce safety and productivity monitoring at a construction site.

In this scenario, the dataset you use to train your algorithm must be specific to construction workers, meaning it must include construction workers who might be wearing safety gear such as helmets, safety gloves, etc.

Such workers might be difficult for a standard pedestrian detection algorithm to detect. The same goes for lighting conditions, attire in different geographic locations, and so on.
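
To check whether a detector holds up across the target environment, one common approach is to report metrics separately for each condition instead of as a single average, so a weak condition is not hidden. In this sketch the condition tags (`lighting`, `attire`) and the counts are made up for illustration; in practice they would come from your own annotated test set.

```python
from collections import defaultdict

# Made-up per-image evaluation records: environment tags plus
# true-positive (tp) and false-negative (fn) counts for that image.
results = [
    {"lighting": "day", "attire": "helmet", "tp": 48, "fn": 2},
    {"lighting": "night", "attire": "helmet", "tp": 30, "fn": 10},
    {"lighting": "day", "attire": "casual", "tp": 45, "fn": 5},
    {"lighting": "night", "attire": "casual", "tp": 20, "fn": 20},
]


def recall_by(records: list[dict], key: str) -> dict[str, float]:
    """Aggregate recall for each value of one environment attribute."""
    tp: dict[str, int] = defaultdict(int)
    fn: dict[str, int] = defaultdict(int)
    for record in records:
        tp[record[key]] += record["tp"]
        fn[record[key]] += record["fn"]
    # NaN marks a condition with no ground-truth people at all.
    return {v: tp[v] / (tp[v] + fn[v]) if tp[v] + fn[v] else float("nan")
            for v in tp}


print(recall_by(results, "lighting"))
print(recall_by(results, "attire"))
```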

So you need to keep your target environment in mind when testing. Now, in addition to identifying the camera parameters and the parameters of the use case's environment, we also need to look at the scale of detection required before we start identifying the testing scenario.

So what is scale?

Scale, simply put, refers to how many people you want to detect at the same time and the range at which you want to detect them.

You also need to choose a testing scenario that closely matches the end use case.

For example, if the use case only involves detecting larger objects, that is, people close to the camera, as in face recognition, then the testing scenario should cover only such cases and not smaller objects.
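
A simple way to make the test set reflect the target scale is to bucket ground-truth boxes by pixel area and keep only the buckets the use case cares about. This sketch borrows the small/medium/large area thresholds (32² and 96² pixels) used in COCO evaluation, applied here to bounding-box area; the example boxes and the choice of buckets are illustrative assumptions.

```python
SMALL_MAX = 32 * 32   # COCO-style upper bound for "small" objects, in px^2
MEDIUM_MAX = 96 * 96  # COCO-style upper bound for "medium" objects, in px^2

Box = tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def size_bucket(box: Box) -> str:
    """Classify a bounding box as small, medium, or large by its area."""
    x0, y0, x1, y1 = box
    area = max(0, x1 - x0) * max(0, y1 - y0)
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


def filter_to_scale(boxes: list[Box], target_buckets: set[str]) -> list[Box]:
    """Keep only ground-truth boxes whose size matches the target scale."""
    return [box for box in boxes if size_bucket(box) in target_buckets]


gt_boxes = [(10, 10, 30, 50), (100, 80, 160, 200), (300, 50, 500, 450)]
# Close-range use case: evaluate only on large (near-camera) people.
print(filter_to_scale(gt_boxes, {"large"}))  # [(300, 50, 500, 450)]
```

If you filter ground truth this way, detections that correspond to the excluded people should be ignored rather than counted as false positives, otherwise the scale filter distorts precision.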

This target scale is an important factor to consider for your specific use case. You also need to look at it the other way around: suppose your target use case needs a wide field of view; then the people in the image will be smaller, and you might not get good test results if you actually trained your model on narrow field-of-view images.
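
The field-of-view trade-off can be quantified with the pinhole relation HFOV = 2·atan(w / 2f), where w is the sensor width and f the focal length, together with the horizontal pixel density it yields at a given distance. The 6.4 mm sensor width, 1920 px image width, and 10 m distance in this sketch are assumptions, and real wide-angle lenses add distortion that this model ignores.

```python
import math


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float) -> float:
    """Horizontal field of view of an ideal (pinhole) lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


def pixels_per_metre(hfov_deg: float, image_width_px: int, distance_m: float) -> float:
    """Horizontal pixel density on a plane at the given distance."""
    # Width of the scene covered by the image at that distance.
    scene_width_m = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    return image_width_px / scene_width_m


# Hypothetical 6.4 mm-wide sensor, 1920 px wide image, people 10 m away.
for focal in (12.0, 4.0, 2.0):
    fov = horizontal_fov_deg(focal, 6.4)
    density = pixels_per_metre(fov, 1920, 10.0)
    print(f"f={focal:>4} mm  HFOV={fov:5.1f} deg  {density:6.1f} px/m")
```

Under these assumptions, moving from a 12 mm to a 2 mm lens drops the density from 360 to 60 px/m, so a 0.5 m-wide person shrinks from about 180 px to about 30 px across.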

So, as I mentioned earlier in the podcast, this is how target scale is related to the camera parameters.

So, to define a testing scenario, we need to look at the camera parameters, target environment, and scale, and you need to select multiple testing scenarios for each use case.
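
Because each use case needs several scenarios, one option is to enumerate them as a grid over the three dimensions and then prune the combinations that never occur in deployment. The `TestScenario` class and the example values for a construction-site use case are hypothetical.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class TestScenario:
    camera: str       # hypothetical label for lens and pose, e.g. "4mm-top"
    environment: str  # e.g. "day", "night", "dusty"
    scale: str        # e.g. "medium", "large"


def scenario_matrix(cameras: list[str], environments: list[str],
                    scales: list[str]) -> list[TestScenario]:
    """Enumerate every combination of the three test dimensions."""
    return [TestScenario(c, e, s) for c, e, s in product(cameras, environments, scales)]


scenarios = scenario_matrix(
    cameras=["4mm-top", "8mm-oblique"],
    environments=["day", "night", "dusty"],
    scales=["medium", "large"],
)
print(len(scenarios))  # 12, i.e. 2 * 3 * 2
for scenario in scenarios[:3]:
    print(scenario)
```

The grid grows multiplicatively with each added value, which is one reason the scenario list should be pruned to the conditions the deployment actually faces.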

This consumes the engineering team's time and energy: instead of building the business application logic, the team ends up focused on building the machine learning algorithm.

It’s just a waste of time for everyone concerned.

So at VisAI labs, we have built a series of tunable people detection algorithms that can help identify dwell time, congestion time, intrusion, people counting, tracking, etc. They have been pre-tested across various scenarios, such as indoor and outdoor, as well as at various scales, from people identification at close range to mass-density identification in concerts, malls, etc.

They can also be deployed on various types of edge processors and embedded cameras.

You can check out the link in the description to learn more about VisAI labs' pre-built people detection solutions and how they can speed up your Edge AI and computer vision product development.

So, in summary, you should select a testing scenario based on the camera parameters, target environment, and scale required by your use case, and VisAI labs has already solved this problem with its set of pre-built solutions.

This is Alphonse signing off, and I'm looking forward to joining you in another session of the podcast, unraveling Edge AI and computer vision for the real world.

Ciao!