Summary

Written for:

Introduction

With Detection:

Without Detection:

- Online tracking

- Offline tracking

Tracking Approaches

- Spatial Model

- Appearance Model

- Motion Model

- Interaction or Social Model

Background

Spatial Model

- IoU matching: The intersection-over-union (IoU) score is computed between the current detection and every existing tracker. The tracker with the highest IoU score is selected as the best match.

- Distance thresholding: The distance between the center of the current detection box and the center of each tracked object is computed. The object with the smallest distance, within a threshold, is considered the best match.

- Object size: The object's size can also be used to filter candidates when several objects fall within the distance threshold.
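The three spatial criteria above can be combined into one matching step. The following is a minimal Python sketch, assuming boxes are (x1, y1, x2, y2) pixel tuples and that one detection is matched at a time. The function names (`iou`, `center_distance`, `match_detection`) and the thresholds (`max_dist`, `max_size_ratio`) are hypothetical, chosen for illustration rather than taken from any particular tracker.

```python
import math
from typing import Optional, Sequence, Tuple

# Assumed convention: boxes are (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


def area(b: Box) -> float:
    return (b[2] - b[0]) * (b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def center_distance(a: Box, b: Box) -> float:
    """Euclidean distance between the centers of two boxes."""
    ax, ay = (a[0] + a[2]) / 2, (a[1] + a[3]) / 2
    bx, by = (b[0] + b[2]) / 2, (b[1] + b[3]) / 2
    return math.hypot(ax - bx, ay - by)


def match_detection(det: Box, tracks: Sequence[Box],
                    max_dist: float = 50.0,
                    max_size_ratio: float = 2.0) -> Optional[int]:
    """Return the index of the best-matching track, or None.

    1. IoU matching: take the track with the highest non-zero IoU.
    2. Otherwise, distance thresholding: take the nearest track center
       within max_dist, skipping tracks whose area differs from the
       detection's by more than max_size_ratio (object-size filter).
    """
    best_iou, best_idx = 0.0, None
    for i, t in enumerate(tracks):
        score = iou(det, t)
        if score > best_iou:
            best_iou, best_idx = score, i
    if best_idx is not None:
        return best_idx

    best_dist = max_dist
    for i, t in enumerate(tracks):
        small, large = sorted((area(det), area(t)))
        if large / max(small, 1e-9) > max_size_ratio:
            continue  # size too different to be the same object
        d = center_distance(det, t)
        if d <= best_dist:
            best_dist, best_idx = d, i
    return best_idx


if __name__ == "__main__":
    tracks = [(100, 100, 140, 180), (300, 100, 340, 180)]  # P1, P2, frame 1
    print(match_detection((150, 100, 190, 180), tracks, max_dist=60))  # -> 0
    print(match_detection((290, 100, 330, 180), tracks))  # -> 1
```

The boxes in the usage lines are made up to mimic Figure 1: the fast-moving P1 detection has zero IoU with every track and is matched only because its center is within `max_dist`, while P2 overlaps its previous box and is matched by IoU. This greedy, one-detection-at-a-time design is kept for clarity; a real tracker would usually solve the assignment for all detections jointly.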

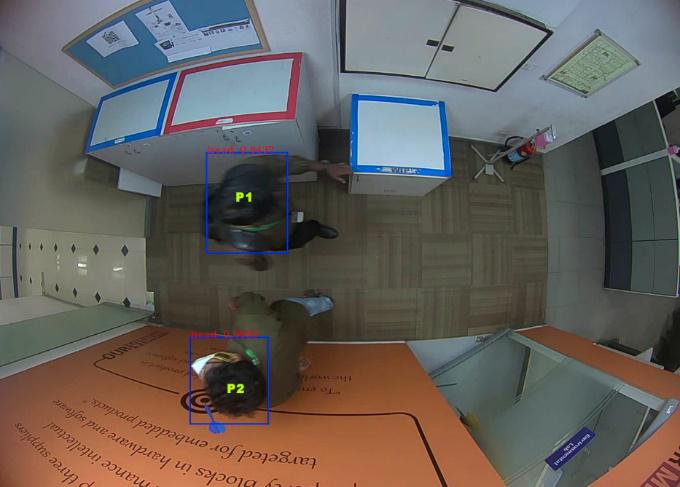

Figure 1: Frames 1 and 2 show two persons (P1 and P2), seen from a top view, moving in opposite directions. P1 moves fast, so its boxes in the two frames do not overlap (the IoU is zero); in the spatial model, P1 can therefore be tracked only by distance thresholding. P2's boxes do overlap, so P2 is tracked using both IoU and the distance threshold.

Appearance Model

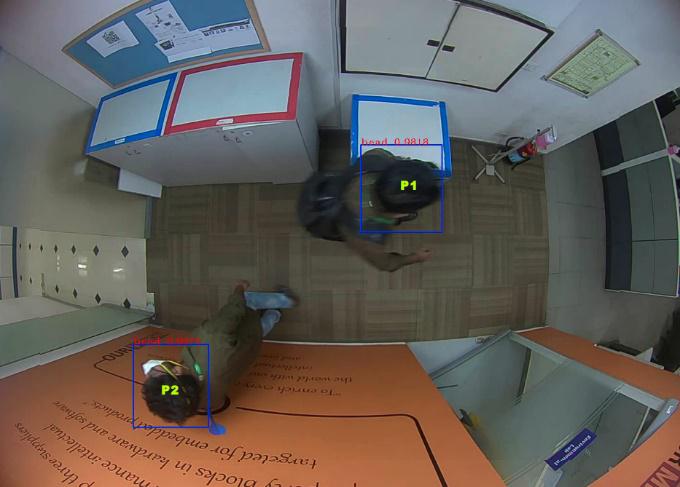

Figure 2: The same person is detected in different frames. The appearance model relies mainly on the color of the person's clothing. Its limitation is that the background color inside the bounding box is also taken into account: if the detection box is large, the background may influence the decision and cause the person to be associated with the wrong track.

1. Correlation: Compare the color data of the detection box with the tracker's stored past data.

2. Histogram of colors: Compare the color histogram of the detection box with the tracker's stored past histograms.

3. HOG (Histogram of oriented gradients): HOG features can be used to compare shapes. However, computing them in real time for multiple objects is computationally expensive.
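The histogram-of-colors approach (item 2), scored with a correlation measure in the spirit of item 1, can be sketched in NumPy as below. It assumes each detection is available as an HxWx3 uint8 crop and that each track keeps a short list of past histograms; the helper names, the 16-bin setting and the `min_score` threshold are hypothetical. HOG is left out. With OpenCV, `cv2.calcHist` and `cv2.compareHist` with `cv2.HISTCMP_CORREL` compute an equivalent correlation score.

```python
import numpy as np


def color_histogram(crop: np.ndarray, bins: int = 16) -> np.ndarray:
    """Concatenated per-channel histogram of an HxWx3 uint8 crop, summing to 1."""
    hists = [np.histogram(crop[..., c], bins=bins, range=(0, 256))[0]
             for c in range(3)]
    h = np.concatenate(hists).astype(np.float64)
    return h / max(h.sum(), 1.0)


def hist_correlation(h1: np.ndarray, h2: np.ndarray) -> float:
    """Pearson correlation of two histograms (1.0 means identical shape)."""
    a, b = h1 - h1.mean(), h2 - h2.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def best_appearance_match(det_crop, track_histories, bins=16, min_score=0.5):
    """Index of the track whose past histograms best match det_crop, or None.

    track_histories holds one list of past histograms per track; each
    track is scored by its mean correlation with the detection.
    """
    h = color_histogram(det_crop, bins)
    best_idx, best_score = None, min_score
    for i, history in enumerate(track_histories):
        if not history:
            continue  # no appearance data stored for this track yet
        score = float(np.mean([hist_correlation(h, past) for past in history]))
        if score > best_score:
            best_idx, best_score = i, score
    return best_idx
```

Because the whole box is histogrammed, background pixels inside a large box contribute to the score as well, which is exactly the limitation described for Figure 2.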

Motion Model

1. Moving average to predict the next position: Compute the average x and y velocity over a recent window and predict the next position from that average velocity. A weighted average, which gives more weight to recent data than to older data, usually works better. Tracking people in particular benefits from this weighting, because a person's velocity and acceleration can change frequently.

2. Kalman filter to predict the next position: Estimate the object's velocity (and, if modeled, acceleration) from historical measurements. The Kalman filter is good at smoothing noisy measurements and gives an optimal estimate, but only under its assumptions: the motion model is linear and the noise is Gaussian. Noise in a real-world environment is often not Gaussian and is hard to predict, which is the main limitation of the Kalman approach.
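Both predictors can be sketched in a few lines of NumPy. The moving-average version weights per-frame velocities with exponentially decaying weights; the `window` and `decay` values are arbitrary assumptions. The Kalman version is a textbook constant-velocity filter over the state [x, y, vx, vy]. It does not model acceleration, and its noise variances (`process_var`, `meas_var`) are placeholders that would need tuning for real footage. All function and class names here are hypothetical.

```python
import numpy as np


def weighted_ma_predict(positions, window=5, decay=0.6):
    """Predict the next (x, y) from a list of past (x, y) positions.

    Per-frame velocities over the last `window` steps are averaged with
    exponentially decaying weights, so the most recent step counts most.
    """
    pts = np.asarray(positions[-(window + 1):], dtype=float)
    if len(pts) < 2:
        return tuple(pts[-1])                    # no motion history yet
    vel = np.diff(pts, axis=0)                   # per-frame (vx, vy)
    w = decay ** np.arange(len(vel))[::-1]       # newest step gets weight 1
    v = (vel * w[:, None]).sum(axis=0) / w.sum()
    return tuple(pts[-1] + v)


class ConstantVelocityKalman:
    """Constant-velocity Kalman filter; state is [x, y, vx, vy].

    Acceleration is not modeled. process_var and meas_var are
    placeholder values, not tuned for any real camera.
    """

    def __init__(self, x, y, dt=1.0, process_var=1.0, meas_var=10.0):
        self.s = np.array([x, y, 0.0, 0.0])      # start at rest
        self.P = np.eye(4) * 100.0               # large initial uncertainty
        self.F = np.array([[1, 0, dt, 0],        # x += vx * dt
                           [0, 1, 0, dt],        # y += vy * dt
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0],         # only (x, y) is measured
                           [0, 1, 0, 0]], dtype=float)
        self.Q = np.eye(4) * process_var         # simplistic process noise
        self.R = np.eye(2) * meas_var            # measurement noise

    def predict(self):
        """Advance one frame and return the predicted (x, y)."""
        self.s = self.F @ self.s
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.s[:2].copy()

    def update(self, zx, zy):
        """Correct the state with a measured box center (zx, zy)."""
        innovation = np.array([zx, zy]) - self.H @ self.s
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.s = self.s + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
```

In a tracking loop, `predict()` would be called once per frame to obtain the expected position used for association, and `update()` would be called with the matched detection's center. When no detection is matched in a frame, the prediction alone carries the track forward.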

Interaction or Social Model

Conclusion

Is it difficult to integrate people tracking, detection, and recognition features into your products? Contact us for more information.

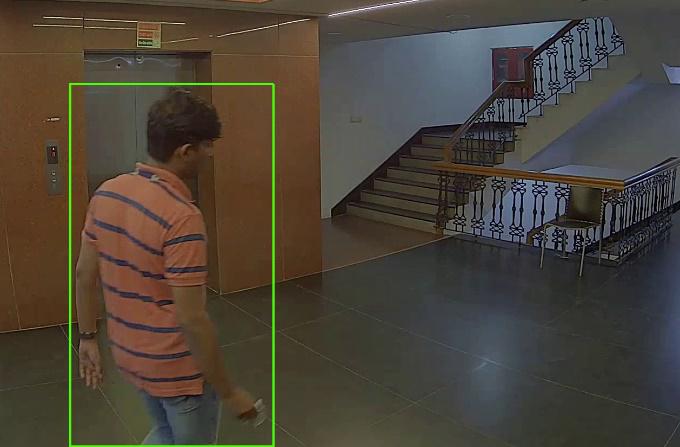

VisAI Platform – Human/People tracking and detection Solution

VisAI Labs understands the fundamental need for edge-optimized human/people tracking and detection algorithms that suit a variety of use cases and are pre-tested across different scenarios, so that you can focus on developing your product or solution.

Feel free to reach us at sales@visailabs.com